The Ultimate Head-to-Head OVP Testing Toolkit

Introduction:

Your video testing toolkit

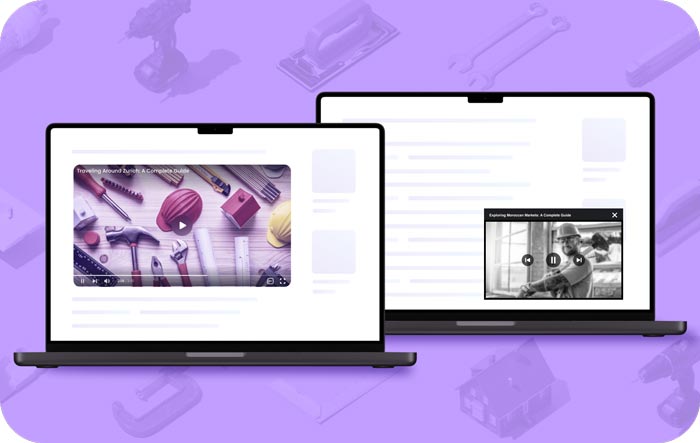

Online video represents the highest-value real estate on your website, with a single video player capable of driving 25% of total page revenue. Yet, despite widespread adoption, most publishers still struggle to unlock video’s full potential. While 80% of publishers now use video extensively, only 23% rate their video experience as “good” or better. Selecting the right online video platform (OVP) is crucial — but how do you evaluate the growing field of vendors and technologies?

Running a head-to-head test of OVPs promises clear answers, but the process is far more complex than it may sound. Beyond simply comparing revenue numbers, a proper evaluation requires:

- Coordinated effort across multiple departments

- Careful technical setup to ensure fair comparison

- Several weeks of sustained project momentum

- Protection against tactics that could hurt site health

A poorly designed test can leave you worse off than before — misled by skewed data or with damaged inventory quality that takes months to repair. Publishers must be strategic in both when they decide to test and how they execute these experiments.

Think of this guide as your ultimate OVP testing toolkit. We provide precise measuring instruments to gauge true performance, leveling tools to ensure fair traffic comparisons, diagnostic frameworks to identify issues early, and much more.

Each section offers battle-tested tools and techniques to help you build a winning evaluation plan. Whether you’re replacing an incumbent vendor or exploring new video capabilities, this toolkit will help you make a confident, well-informed choice.

Why we’re focusing on head-to-head tests

Video isn’t just another feature on your website — it’s a fundamental piece of your digital strategy. The right OVP shapes how you build new revenue streams, architect engaging viewer experiences, and engineer innovative content strategies.

Testing multiple vendors can help inform this critical decision by separating genuine partners from showy (or shady) imposters. But, without proper setup and execution, an evaluation period can lead to misleading results, or worse, damage to your site’s inventory quality and reputation, hindering long-term monetization.

While researching video platforms, publishers typically encounter two types of evaluations:

Trials or pilots

A 60 to 90-day evaluation period of a single vendor to validate performance, workflow fit, and technical implementation before making a longer commitment.

Head-to-head tests

Running multiple vendors simultaneously to compare performance when replacing an incumbent, evaluating newer market solutions, or looking to consolidate vendors.

Head-to-head evaluations provide the clearest comparison of how different solutions perform with your specific content, audience, and monetization needs. However, with multiple vendors comes greater complexity in ensuring a fair playing field. This guide helps you make the most of this investment to build towards an answer you can trust.

Before you test:

3 diagnostic questions

1. Do you really need to test?

The first question to consider is whether you need to test at all. Before proceeding, make sure you meet the basic requirements: at least 100,000 daily player loads, and part-time (fractional) support from IT, ad operations, product, and analytics.

Then, consider if you might still avoid the time and expense of a head-to-head test by identifying a clear leader through other evaluation methods, such as:

Request for proposals

A thorough RFP process can help qualify vendors across detailed requirements and use cases.

Peer recommendations

Learn from other publishers’ experiences through peer reviews, case studies, and customer references.

Direct vendor evaluation

Run a proof of concept with a single preferred vendor to validate their capabilities.

| Evaluation type | Best for | Duration | Resource needs |
|---|---|---|---|
| Head-to-head test | Replacing incumbent vendor; evaluating multiple new solutions; validating performance claims | 4–6 weeks | High: cross-team coordination |
| Single vendor trial | Testing specific capabilities; proof of concept; technical validation | 60–90 days | Medium: focused on key stakeholders |
| RFP process | Market scanning; initial vendor screening; budget planning | 2–4 weeks | Medium: mainly procurement team |

2. What is the scope of your test?

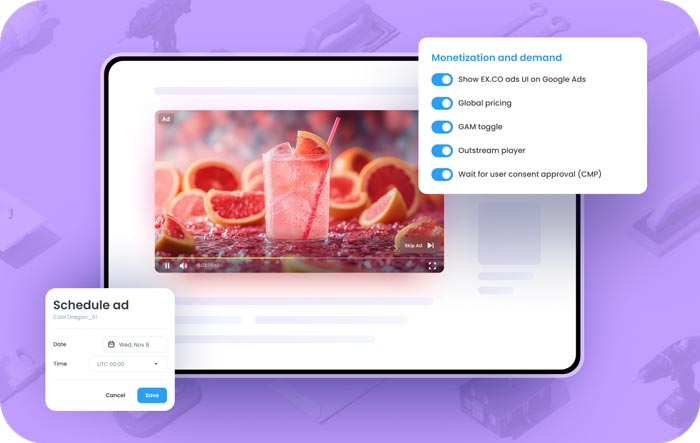

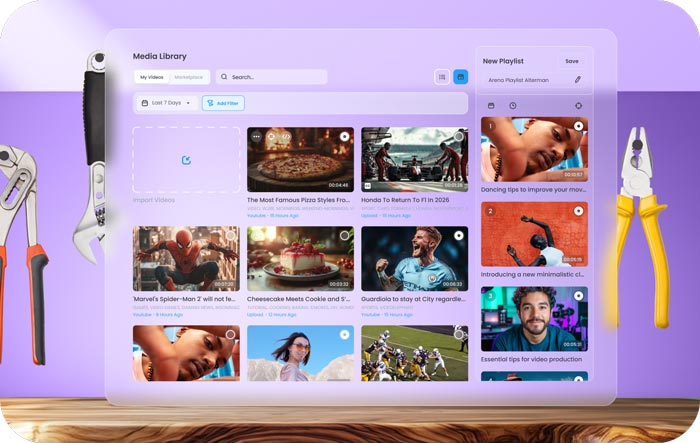

For any test to succeed, you need a clear idea of what features and solution types you’re testing. In the world of online video, these considerations might be:

- Pure ad monetization (e.g. outstream units)

- Full OVP capabilities, including content management

- Specific features like AI-powered video recommendations

- Access to premium content

- Dynamic playlist creation

- Advanced demand capabilities

- Customer service

Make sure every vendor in a test is offering the same type of solution. Comparing a full OVP against a simple outstream ad unit, for example, will rarely yield useful insights. Also be intentional about the number of vendors involved. While testing two or three platforms is common, some publishers test up to five simultaneously, which may not produce meaningful results.

3. Which stakeholders should get involved?

The scope of your test will determine which teams to get involved, and at which levels of engagement. While “monetization” is the top video priority for 59% of publishers, the decision makers involved in video strategy are evenly spread across revenue, editorial, and product.

Assign a primary test coordinator to manage communication between these groups and incorporate all perspectives into the final evaluation. Editors will have valuable input on content workflows, tagging, and playlist creation, while product managers can monitor technical indicators like page performance and API flexibility.

At the workbench:

Ensuring a ‘level’ testing field

Just as carpenters require a level surface to build objects that last, publishers need even testing conditions to get results they can trust. Poorly executed evaluations not only leave you misinformed, but can incur long-lasting negative impacts on your business. Consider one major news publisher’s costly lesson:

Eager to boost revenue, they rushed through a two-week evaluation without proper controls or setup. The winning vendor appeared to deliver superior results, but achieved this through aggressive ad calling practices that violated industry standards. Within months, the publisher’s inventory was flagged as “cheap reach” by Jounce Media, a designation that took six months to overcome and cost them relationships with premium advertisers. What seemed like a quick win led to significant long-term damage.

This publisher’s experience illustrates why proper test setup is crucial. A vendor that hammers a test with aggressive monetization tactics can lead to:

- Your inventory being blocked by the buy side or flagged by industry watchdogs

- SSPs and DSPs throttling traffic due to poor ad-calling practices

- Lower site health scores that take months to recover from testing damage

Here are the key areas you’ll need to calibrate for a proper evaluation.

Minimum test duration

A proper test requires four to six weeks of live evaluation, plus up to three weeks upfront for initial setup and optimization. Don’t be tempted to make quick decisions based on early results. The first five days of performance data will fluctuate while yield algorithms calibrate and machine-learning optimization tests multiple scenarios.

Ideal OVP Testing Timeline

Balanced traffic breakdown

Test activity must be carefully balanced, with an even traffic split between vendors (50/50 in a two-vendor test). Anything less creates an uneven comparison. Each vendor’s test traffic should also reflect your actual website distribution. For example, if 40% of your traffic is from the US, maintain that ratio for every vendor in the test. The same principle applies to the other dimensions of your audience, such as operating system.
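As a rough illustration of the balance check described above, the sketch below compares each vendor's share of player loads and its US/international mix against the site-wide mix. The record layout (`vendor`, `geo`) and the sample data are assumptions made for this example, not a prescribed log format.

```python
from collections import Counter

def traffic_shares(loads, key):
    """Return each value's share of player loads for the given key."""
    counts = Counter(load[key] for load in loads)
    total = sum(counts.values())
    return {value: count / total for value, count in counts.items()}

def max_share_gap(site_shares, vendor_shares):
    """Largest absolute difference between site-wide and vendor shares."""
    values = set(site_shares) | set(vendor_shares)
    return max(
        abs(site_shares.get(v, 0.0) - vendor_shares.get(v, 0.0)) for v in values
    )

# Hypothetical sample: one record per player load.
loads = (
    [{"vendor": "A", "geo": "US"}] * 2
    + [{"vendor": "A", "geo": "INTL"}] * 3
    + [{"vendor": "B", "geo": "US"}] * 2
    + [{"vendor": "B", "geo": "INTL"}] * 3
)

vendor_split = traffic_shares(loads, "vendor")  # share of loads per vendor
site_geo = traffic_shares(loads, "geo")         # site-wide geographic mix
vendor_a_geo = traffic_shares([l for l in loads if l["vendor"] == "A"], "geo")
geo_gap_a = max_share_gap(site_geo, vendor_a_geo)

print(vendor_split, site_geo, geo_gap_a)
```

A gap near zero suggests that vendor A is seeing the same geographic mix as the site overall. The tolerance you accept is a judgment call to agree on with all vendors before the test starts.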

Equal SSP integrations

All vendors should have access to the same supply-side platforms (SSPs) for your demand sources. But a connection alone isn’t enough. Verify actual response rates from each SSP, as some vendors may have stronger SSP relationships that affect performance. Think of this as checking not just whether a power tool is plugged in, but if it’s receiving the right voltage.

Common ad-serving standards

Ad serving standards act as your testing guardrails, ensuring fair play in how ads are delivered:

Frequency

Maintain the same ad frequency settings and refresh intervals, ensuring no vendor is making excessive calls at faster intervals that could harm site performance.

Viewability

Only play ads in-view, as players that continue showing ads when closed or out of view lead to artificially inflated revenue (and lower monetization over the long term).

Integrations

Vendors should call each SSP only once per auction; multiple calls may temporarily inflate fill rates, and can lead to throttling and negative site health scores.
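One way to spot repeated SSP calls is to count requests per vendor, auction, and SSP in whatever ad-request logs you can obtain. The sketch below assumes a simple list of records with hypothetical field names (`vendor`, `auction_id`, `ssp`); real log formats will differ by vendor.

```python
from collections import Counter

def duplicate_ssp_calls(ad_requests):
    """Return (vendor, auction_id, ssp) keys that were called more than once."""
    counts = Counter(
        (req["vendor"], req["auction_id"], req["ssp"]) for req in ad_requests
    )
    return {key: n for key, n in counts.items() if n > 1}

# Hypothetical ad-request log; field names are assumptions for illustration.
ad_requests = [
    {"vendor": "vendor_a", "auction_id": "a1", "ssp": "ssp_x"},
    {"vendor": "vendor_a", "auction_id": "a1", "ssp": "ssp_y"},
    {"vendor": "vendor_b", "auction_id": "b1", "ssp": "ssp_x"},
    {"vendor": "vendor_b", "auction_id": "b1", "ssp": "ssp_x"},  # repeat call
]

repeats = duplicate_ssp_calls(ad_requests)
for (vendor, auction_id, ssp), n in repeats.items():
    print(f"{vendor} called {ssp} {n} times in auction {auction_id}")
```

Any non-empty result is worth raising with the vendor before comparing fill rates.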

Identical player configurations

Video player settings must be identical across vendors, including player location and content types (if coming from recirculated publisher content). Consider adding a negative interaction option as a user experience quality indicator, providing valuable feedback to revenue and editorial teams about how viewers interact with different solutions.

Price floor management

Ensure all vendors are using the same price floors for US and international traffic, and watch for any unexpected floor changes. Be aware that some vendors might artificially lower price floors to win tests. While lower floors may boost short-term revenue, they can seriously damage long-term inventory value, like sacrificing structural integrity for immediate cost savings.

Data definition alignment

Before starting your test, ensure all parties agree on key metric definitions, such as page load measurement, revenue attribution, and user splits across geography, operating system, and domain. Establish clear success criteria and KPIs for determining a “test won” status, prioritizing comparative metrics such as effective revenue per mille (RPM), as detailed below. Looking at revenue alone can present a misleading picture, as even a slight difference in page loads can lead to significant distortions in perceived monetization abilities.

Revenue ÷ Player Loads × 1000 = Effective RPM
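To make the formula concrete, here is a minimal sketch that applies it to two vendors. The revenue and player-load figures are invented for illustration only.

```python
def effective_rpm(revenue, player_loads):
    """Effective RPM = revenue / player loads * 1000."""
    if player_loads <= 0:
        raise ValueError("player_loads must be positive")
    return revenue / player_loads * 1000

# Hypothetical test totals (not real data).
results = {
    "vendor_a": {"revenue": 5200.0, "player_loads": 1_300_000},
    "vendor_b": {"revenue": 5000.0, "player_loads": 1_000_000},
}

rpm = {
    name: effective_rpm(r["revenue"], r["player_loads"])
    for name, r in results.items()
}

for name, value in rpm.items():
    print(f"{name}: revenue ${results[name]['revenue']:,.0f}, effective RPM ${value:.2f}")
```

In this made-up case vendor A earns more total revenue, but only because it received more player loads. On an effective RPM basis (roughly $4 versus $5), vendor B monetizes each load better.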

Pre-launch technical verification

- Player placement standardized

- Ad refresh intervals aligned

- Price floors documented

- SSP connections verified

- Analytics tracking configured

- Page performance baseline established

Post-test: How to measure and inspect results

When it’s time to assess your test results, publishers need precise measuring tools to evaluate engagement metrics, reliable gauges for ad performance, and a keen eye for quality inspection. Here’s how to ensure your analysis leads to sound conclusions:

Avoid historical comparisons

A common testing mistake is comparing current test results against historical data. This approach fails to account for crucial variables such as seasonality, industry changes, and campaign variations — all of which can make historical comparisons invalid. Even if a vendor shows better numbers than your previous month’s performance, these results don’t provide a meaningful comparison for choosing a particular OVP.

Assess the full revenue opportunity

When it comes to monetization, consider the complete financial picture, including OVP fee structures and incremental income from various demand sources. For example, a higher platform fee for one OVP may be a fraction of net-new revenue generated by its unique demand sources. Calculate total effective revenue after all fees to understand the overall impact on your bottom line.
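The fee comparison above can be sketched as a small calculation. The fee model here (a percentage revenue share plus a flat platform fee) and all of the numbers are assumptions for illustration; actual OVP contracts may be structured differently.

```python
def net_revenue(gross_revenue, revenue_share=0.0, flat_fee=0.0):
    """Revenue kept by the publisher after a revenue share and a flat fee.

    revenue_share is the fraction of gross revenue paid to the vendor
    (for example 0.10 for 10%); flat_fee is a fixed platform fee.
    """
    if not 0.0 <= revenue_share <= 1.0:
        raise ValueError("revenue_share must be between 0 and 1")
    return gross_revenue * (1 - revenue_share) - flat_fee

# Hypothetical monthly figures (not real data).
vendor_a_net = net_revenue(gross_revenue=10_000.0, flat_fee=500.0)
vendor_b_net = net_revenue(gross_revenue=12_000.0, flat_fee=1_500.0)

print(f"vendor_a net: ${vendor_a_net:,.2f}")
print(f"vendor_b net: ${vendor_b_net:,.2f}")
```

In this example vendor B charges three times the platform fee yet still leaves the publisher with more net revenue, because its additional demand more than covers the difference.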

Don’t discount secondary KPIs

While revenue is the priority in adopting video for 59% of publishers, secondary metrics provide crucial context for long-term success. A platform that performs well financially but creates workflow inefficiencies or degrades user experience may cost more in the long run. Remember that choosing an OVP impacts not just revenue but your entire digital strategy, including teams like product, editorial, ad ops, customer support, and more.

| # | Goals | KPIs |
|---|---|---|
| 01 | Monetization | |
| 02 | User experience | |
| 03 | Scale | |
| 04 | Technical | |
| 05 | Customer service | |

Selecting your power tool: A final checklist

1. Performance assessment

- Calculate weighted revenue score

  - Effective RPM = Revenue ÷ Player Loads × 1000

- Calculate weighted secondary KPI score

  - Revenue: e.g., fill rate, viewability

  - Editorial: e.g., duration, content relevancy

  - Product: e.g., player look and feel, load time, UX quality

- Verify all vendors followed technical best practices

  - SSP integration performance

  - Platform stability metrics

  - Page performance impact

  - API/integration capabilities

  - Security requirements met

- Check long-term health indicators

  - DSP relationship status

  - SSP relationship health

  - Industry reputation check (e.g., Jounce Report)

  - Product roadmap alignment

- Review anomalies or outliers in test data

2. Stakeholder feedback

- Revenue team sign-off

- Editorial team workflow assessment

- Product team technical evaluation

- Sales/Marketing team input

- Engineering implementation feasibility review

3. Business terms review

- Total cost calculation (including all fees)

- Contract terms evaluation

- SLA and support provisions

- Exit terms

4. Implementation readiness

- Migration timeline created

- Content transition plan

- Team training schedule

- Monitoring framework defined

- Post-launch KPIs established

5. Final decision and next steps

- Compile all test results and stakeholder feedback

- Hold review meeting and make final vendor selection

- Brief impacted teams and schedule first trainings

- Kick off content migration and transition plan
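The weighted scores in step 1 of the checklist could be computed along the following lines. This is only a sketch: the category weights and the normalized 0-to-1 scores are hypothetical and should be replaced with values your stakeholders agree on before the test.

```python
def weighted_score(scores, weights):
    """Weighted average of per-category scores, each on a 0-to-1 scale."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    missing = set(weights) - set(scores)
    if missing:
        raise KeyError(f"missing scores for: {sorted(missing)}")
    return sum(scores[k] * w for k, w in weights.items()) / total_weight

# Hypothetical weights agreed before the test starts.
weights = {"revenue": 0.5, "editorial": 0.25, "product": 0.25}

# Hypothetical normalized scores per vendor (1.0 is best).
vendor_scores = {
    "vendor_a": {"revenue": 0.8, "editorial": 0.6, "product": 0.4},
    "vendor_b": {"revenue": 0.6, "editorial": 0.8, "product": 0.8},
}

totals = {name: weighted_score(s, weights) for name, s in vendor_scores.items()}
for name, total in sorted(totals.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {total:.2f}")
```

Fixing the weights before results come in helps keep the final decision from being shaped by whichever metric happens to favor a preferred vendor.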

Sharpen your strategy for video success

Like a master craftsperson’s workshop, your video strategy requires both the right tools and the expertise to use them effectively. A well-executed OVP test does more than just identify the best technology partner — it helps you build a deeper understanding of your video needs, audience engagement patterns, and revenue growth potential.

Modern OVPs are no longer simple video players but versatile Swiss Army knives of solutions, often spanning content management, AI/ML yield engines, demand generation, content marketplaces, and more. Using this testing toolkit, you can look beyond surface-level features and flashy demos to evaluate what matters most: real performance with your specific content, audience, and business model.

Remember that choosing an OVP isn’t just a technical decision — it’s a strategic investment in your digital future. If you decide to run a head-to-head evaluation, take the time to do it right, as the effort you invest will pay dividends long after implementation, helping you build a video strategy that delivers sustainable growth for years to come.

Ready to start your OVP evaluation?

Schedule a consultation with our video experts.